Testing Database Access With SpecFlow and NDbUnit and LocalDb

I know that some people will hate me for mixing the words testing database with gherkin all in one sentence, but I’ve found that it’s a quite nice combination. Gherkin’s table feature (SpecFlow in my case) allow for very nice test definitions, with NDbUnit it is quite simple to populate the database, and thanks to LocalDb the tests are beautifully portable.

Why unit test database?

Wait, I never said that these are going to be unit tests right? More of integration tests. Otherwise how would you test repositories etc. without actual database?

My use case

A long time ago I implemented a small library called r2rml4net, which implements the R2RML specification. R2RML is a RDF language, in which it is possible to map data from relational databases to RDF. What is RDF is completely out of scope of this post. There’s a good introduction here.

In my project I use my tool to convert an SQL database of public transport brochures I own to RDF for publishing over the web. I wanted automatic tests for those mappings to ensure that the results are correct. Did I mention I have a lot of those brochures? My wife almost hates me for it.

Automatic database tests with SpecFlow

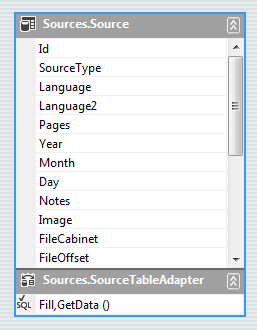

Most of the data sits in a table called Sources.Source. It’s called that, because it holds brochures but also book and

magazine issues.

What r2rml4net does is convert a single row like

+----+------------+----------+-----------+-------+-------------------------+

| Id | SourceType | Language | Language2 | Pages | FolderName |

+----+------------+----------+-----------+-------+-------------------------+

| 1 | folder | tr | en | 2 | Türkkar City Angel E.D. |

+----+------------+----------+-----------+-------+-------------------------+

Into some RDF data. Here it’s turtle, which will be important in a moment.

1 2 3 4 5 6 7 8 9 10 11 12 | |

Defining the test case

Each test is structured int test same way. First I define the table(s) contents similarly to the ASCII art above and in

the Then clauses I write a SPARQL query, which matches the expected result with the output data.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | |

Notice how similar the SPARQL syntax is to that of turtle. In simple English the above means:

- return a boolean value (

ASK) - stating that

graph <http://data.wikibus.org/graph/folder/1/imported> - contains the data from inside

{ the curly braces }

Right, enough RDF and SPARQL. Let’s focus on testing the database.

Preparing the database for tests

It was important to me that each test case runs a fresh database with only the data defined in that test case alone. This

is when I found NDbUnit, which exposes an INDbUnitTest interface for manipulating database contents with

old skool DataSets. Here’s the code I execute at the beginning of each test scenario, which recreates and initializes

an LocalDb instance.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | |

As you see I had to create a DataSet to be able to load data in each test case. More on that later. And of course I had

to create the dataset Wikibus.xsd to match the database structure:

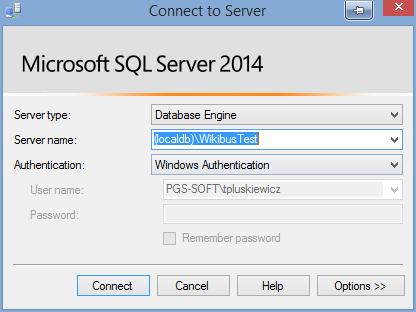

The SqlLocalDbApi is also of great help. Before I would first connect to master to create the

database for tests. Without that it’s not possible to connect to SQL Server and so I had to keep two connection strings.

Now there is only one and the code above ensures that the instance is available and recreated every time. The only

requirement is that the InstanceName matches what you have in the connection string.

1 2 3 | |

It even works out of the box on AppVeyor and doesn’t care whether developers have their SQL instance named

that way or another! All you need is SQL Server installed (I think it’s 2012 or newer). Oh and did I mention that you can

connect to this database using SQL Server Management Studio? ![]()

Populating database with data

With that ready I can now fill the database with some data. Here’s how I bind the Given step above to a SpecFlow method

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 | |

That’s actually quite simple. The only hard and boring part is to convert the weak Table into a DataSet object for

loading. It’s simple copying the table values for each row. As long as the header names match column names all is dandy.

The source code can be viewed on GitHub of course.

Summary

And that’s it. Now I can proceed with the When and Thens to run the code I want tested and with every test case I am

starting with a blank database so that each test is guaranteed to be independent from any other.